Illustration: Tim Bower

THERE’S A CERTAIN AGE at which you cease to regard your own death as a distant hypothetical and start to view it as a coming event. For me, it was 67—the age at which my father died. For many Americans, I suspect it’s 70—the age that puts you within striking distance of our average national life expectancy of 78.1 years. Even if you still feel pretty spry, you suddenly find that your roster of doctor’s appointments has expanded, along with your collection of daily medications. You grow accustomed to hearing that yet another person you once knew has dropped off the twig. And you feel more and more like a walking ghost yourself, invisible to the younger people who push past you on the subway escalator. Like it or not, death becomes something you think about, often on a daily basis.

Actually, you don’t think about death, per se, as much as you do about dying—about when and where and especially how you’re going to die. Will you have to deal with a long illness? With pain, immobility, or dementia? Will you be able to get the care you need, and will you have enough money to pay for it? Most of all, will you lose control over what life you have left, as well as over the circumstances of your death?

These are precisely the preoccupations that the right so cynically exploited in the debate over health care reform, with that ominous talk of Washington bean counters deciding who lives and dies. It was all nonsense, of course—the worst kind of political scare tactic. But at the same time, supporters of health care reform seemed to me too quick to dismiss old people’s fears as just so much paranoid foolishness. There are reasons why the death-panel myth found fertile ground—and those reasons go beyond the gullibility of half-senile old farts.

While politicians of all stripes shun the idea of health care rationing as the political third rail that it is, most of them accept a premise that leads, one way or another, to that end. Here’s what I mean: Nearly every other industrialized country recognizes health care as a human right, whose costs and benefits are shared among all citizens. But in the United States, the leaders of both political parties along with most of the “experts” persist in treating health care as a commodity that is purchased, in one way or another, by those who can afford it. Conservatives embrace this notion as the perfect expression of the all-powerful market; though they make a great show of recoiling from the term, in practice they are endorsing rationing on the basis of wealth. Liberals, including supporters of President Obama’s health care reform, advocate subsidies, regulation, and other modest measures to give the less fortunate a little more buying power. But as long as health care is viewed as a product to be bought and sold, even the most well-intentioned reformers will someday soon have to come to grips with health care rationing, if not by wealth then by some other criteria.

In a country that already spends more than 16 percent of its GDP on health care, it’s easy to see why so many people believe there’s simply not enough of it to go around. But keep in mind that the rest of the industrialized world manages to spend between 20 and 90 percent less per capita and still rank higher than the US in overall health care performance. In 2004, a team of researchers including Princeton’s Uwe Reinhardt, one of the nation’s best known experts on health economics, found that while the US spends 134 percent more than the median of the world’s most developed nations, we get less for our money (fewer physician visits and hospital days per capita, for example) than our counterparts in countries like Germany, Canada, and Australia. (We do, however, have more MRI machines and more cesarean sections.)

Where does the money go instead? By some estimates, administration and insurance profits alone eat up at least 30 percent of our total health care bill (and most of that is in the private sector—Medicare’s overhead is around 2 percent). In other words, we don’t have too little to go around—we overpay for what we get, and we don’t allocate our spending where it does us the most good. “In most [medical] resources we have a surplus,” says Dr. David Himmelstein, cofounder of Physicians for a National Health Program. “People get large amounts of care that don’t do them any good and might cause them harm [while] others don’t get the necessary amount.”

Looking at the numbers, it’s pretty safe to say that with an efficient health care system, we could spend a little less than we do now and provide all Americans with the most spectacular care the world has ever known. But in the absence of any serious challenge to the health-care-as-commodity system, we are doomed to a battlefield scenario where Americans must fight to secure their share of a “scarce” resource in a life-and-death struggle that pits the rich against the poor, the insured against the uninsured—and increasingly, the old against the young.

For years, any push to improve the nation’s finances—balance the budget, pay for the bailout, or help stimulate the economy—has been accompanied by rumblings about the greedy geezers who resist entitlement “reforms” (read: cuts) with their unconscionable demands for basic health care and a hedge against destitution. So, too, today: Already, President Obama’s newly convened deficit commission looks to be blaming the nation’s fiscal woes not on tax cuts, wars, or bank bailouts, but on the burden of Social Security and Medicare. (The commission’s co-chair, former Republican senator Alan Simpson, has declared, “This country is gonna go to the bow-wows unless we deal with entitlements.”)

Old people’s anxiety in the face of such hostile attitudes has provided fertile ground for Republican disinformation and fearmongering. But so has the vacuum left by Democratic reformers. Too often, in their zeal to prove themselves tough on “waste,” they’ve allowed connections to be drawn between two things that, to my mind, should never be spoken of in the same breath: death and cost.

Dying Wishes

The death-panel myth started with a harmless minor provision in the health reform bill that required Medicare to pay in case enrollees wanted to have conversations with their own doctors about “advance directives” like health care proxies and living wills. The controversy that ensued, thanks to a host of right-wing commentators and Sarah Palin’s Facebook page, ensured that the advance-planning measure was expunged from the bill. But the underlying debate didn’t end with the passage of health care reform, any more than it began there. For if rationing is inevitable once you’ve ruled out reining in private profits, the question is who should be denied care, and at what point. And given that no one will publicly argue for withholding cancer treatment from a seven-year-old, the answer almost inevitably seems to come down to what we spend on people—old people—in their final years.

As far back as 1983, in a speech to the Health Insurance Association of America, a then-57-year-old Alan Greenspan suggested that we consider “whether it is worth it” to spend so much of Medicare’s outlays on people who would die within the year. (Appropriately, Ayn Rand called her acolyte “the undertaker”—though she chose the nickname because of his dark suits and austere demeanor.)

Not everyone puts the issue in such nakedly pecuniary terms, but in an April 2009 interview with the New York Times Magazine, Obama made a similar point in speaking of end-of-life care as a “huge driver of cost.” He said, “The chronically ill and those toward the end of their lives are accounting for potentially 80 percent of the total health care bill out here.”

The president was being a bit imprecise: Those figures are actually for Medicare expenditures, not the total health care tab, and more important, lumping the dying together with the “chronically ill”—who often will live for years or decades—makes little sense. But there is no denying that end-of-life care is expensive. Hard numbers are not easy to come by, but studies from the 1990s suggest that between a quarter and a third of annual Medicare expenditures go to patients in their last year of life, and 30 to 40 percent of those costs accrue in the final month. What this means is that around one in ten Medicare dollars—some $50 billion a year—are spent on patients with fewer than 30 days to live.
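For readers who want to check the "one in ten" figure, here is a back-of-the-envelope sketch in Python. It simply multiplies the two ranges quoted above. The function name is made up for illustration, and the total Medicare budget it backs out is only what the $50 billion figure implies, not a number taken from the studies themselves.

```python
# Rough check of the "one in ten Medicare dollars" estimate.
# All inputs are the ranges quoted in the text; nothing here is new data.

def final_month_share(last_year_share, final_month_fraction):
    """Share of annual Medicare spending that goes to the final month of life."""
    return last_year_share * final_month_fraction

# Low end: a quarter of spending in the last year, 30 percent of that in the final month.
low = final_month_share(0.25, 0.30)
# High end: a third of spending in the last year, 40 percent of that in the final month.
high = final_month_share(1 / 3, 0.40)

print(f"Final-month share of Medicare spending: {low:.1%} to {high:.1%}")

# Assumption: if roughly 10 percent equals $50 billion, the implied total
# annual Medicare budget is about $500 billion.
implied_total_billions = 50 / 0.10
print(f"Implied annual Medicare spending: about ${implied_total_billions:.0f} billion")
```

The range works out to roughly 7.5 to 13 percent, so "around one in ten" sits near the middle of what the studies suggest.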

Pronouncements on these data usually come coated with a veneer of compassion and concern: How terrible it is that all those poor dying old folks have to endure aggressive treatments that only delay the inevitable; all we want to do is bring peace and dignity to their final days! But I wonder: If that’s really what they’re worried about, how come they keep talking about money?

At this point, I ought to make something clear: I am a big fan of what’s sometimes called the “right to die” or “death with dignity” movement. I support everything from advance directives to assisted suicide. You could say I believe in one form of health care rationing: the kind you choose for yourself. I can’t stand the idea of anyone—whether it’s the government or some hospital administrator or doctor or Nurse Jackie—telling me that I must have some treatment I don’t want, any more than I want them telling me that I can’t have a treatment I do want. My final wish is to be my own one-member death panel.

A physician friend recently told me about a relative of hers, a frail 90-year-old woman suffering from cancer. Her doctors urged her to have surgery, followed by treatment with a recently approved cancer medicine that cost $5,000 a month. As is often the case, my friend said, the doctors told their patient about the benefits of the treatment, but not about all the risks—that she might die during the surgery or not long afterward. They also prescribed a month’s supply of the new medication, even though, my friend says, they must have known the woman was unlikely to live that long. She died within a week. “Now,” my friend said, “I’m carrying around a $4,000 bottle of pills.”

Perhaps reflecting what economists call “supplier-induced demand,” costs generally tend to go up when the dying have too little control over their care, rather than too much. When geezers are empowered to make decisions, most of us will choose less aggressive—and less costly—treatments. If we don’t do so more often, it’s usually because of an overbearing and money-hungry health care system, as well as a culture that disrespects the will of its elders and resists confronting death.

Once, when I was in the hospital for outpatient surgery, I woke up in the recovery area next to a man named George, who was talking loudly to his wife, telling her he wanted to leave. She soothingly reminded him that they had to wait for the doctors to learn the results of the surgery, apparently some sort of exploratory thing. Just then, two doctors appeared. In a stiff, flat voice, one of them told George that he had six months to live. When his wife’s shrieking had subsided, I heard George say, “I’m getting the fuck out of this place.” The doctors sternly advised him that they had more tests to run and “treatment options” to discuss. “Fuck that,” said George, yanking the IV out of his arm and getting to his feet. “If I’ve got six months to live, do you think I want to spend another minute of it here? I’m going to the Alps to go skiing.”

I don’t know whether George was true to his word. But not long ago I had a friend, a scientist, who was true to his. Suffering from cancer, he anticipated a time when more chemotherapy or procedures could only prolong a deepening misery, to the point where he could no longer recognize himself. He prepared for that time, hoarding his pain meds, taking care to protect his doctor and pharmacist from any possibility of legal retribution. He saw some friends he wanted to see, and spoke to others. Then he died at a time and place of his choosing, with his family around him. Some would call this euthanasia, others a sacrilege. To me, it seemed like a noble end to a fine life. If freedom of choice is what makes us human, then my friend managed to make his death a final expression of his humanity.

My friend chose to forgo medical treatments that would have added many thousands of dollars to his health care costs—and, since he was on Medicare, to the public expense. If George really did spend his final months in the Alps, instead of undergoing expensive surgeries or sitting around hooked up to machines, he surely saved the health care system a bundle as well. They did it because it was what they wanted, not because it would save money. But there is a growing body of evidence that the former can lead to the latter—without any rationing or coercion.

One model that gets cited a lot these days is La Crosse, Wisconsin, where Gundersen Lutheran hospital launched an initiative to ensure that the town’s older residents had advance directives and to make hospice and palliative care widely available. A 2008 study found that 90 percent of those who died in La Crosse under a physician’s care did so with advance directives in place. At Gundersen Lutheran, less is spent on patients in their last two years of life than at nearly any other place in the US, with per capita Medicare costs 30 percent below the national average. In a similar vein, Oregon in 1995 instituted a two-page form called Physician Orders for Life-Sustaining Treatment; it functions as doctor’s orders and is less likely to be misinterpreted or disregarded than a living will. According to the Dartmouth Atlas of Health Care, a 20-year study of the nation’s medical costs and resources, people in Oregon are less likely to die in a hospital than people in most other states, and in their last six months, they spend less time in the hospital. They also run up about 50 percent less in medical expenditures.

It’s possible that attitudes have begun to change. Three states now allow what advocates like to call “aid-in-dying” (rather than assisted suicide) for the terminally ill. More Americans than ever have living wills and other advance directives, and that can only be a good thing: One recent study showed that more than 70 percent of patients who needed to make end-of-life decisions at some point lost the capacity to make these choices, yet among those who had prepared living wills, nearly all had their instructions carried out.

Here is the ultimate irony of the death-panel meme: In attacking measures designed to promote advance directives, conservatives were attacking what they claim is their core value—the individual right to free choice.

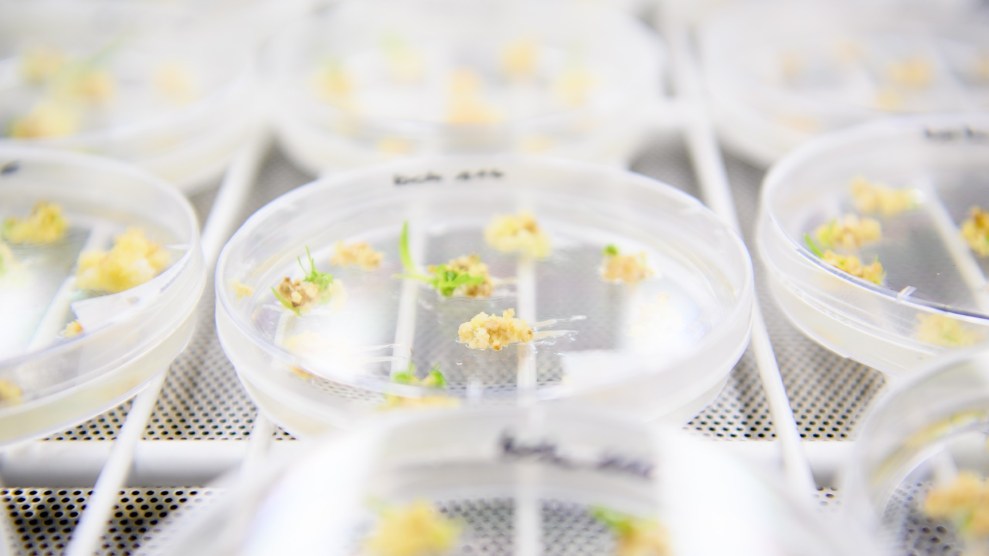

The QALY of Mercy

A wonkier version of the reform-equals-rationing argument is based less on panic mongering about Obama’s secret euthanasia schemes and more on the implications of something called “comparative effectiveness research.” The practice got a jump start in last year’s stimulus bill, which included $1.1 billion for the Federal Coordinating Council for Comparative Effectiveness Research. This is money to study what treatments work best for which patients. The most obvious use of such data would be to apply the findings to Medicare, and the effort has already been attacked as the first step toward the government deciding when it’s time to kick granny to the curb. Senate minority leader Mitch McConnell (R-Ky.) has said that Obama’s support for comparative effectiveness research means he is seeking “a national rationing board.”

Evidence-based medicine, in itself, has absolutely nothing to do with age. In theory, it also has nothing to do with money—though it might, as a byproduct, reduce costs (for example, by giving doctors the information they need to resist pressure from drug companies). Yet the desire for cost savings often seems to drive comparative effectiveness research, rather than the other way around. In his Times Magazine interview last year, Obama said, “It is an attempt to say to patients, you know what, we’ve looked at some objective studies concluding that the blue pill, which costs half as much as the red pill, is just as effective, and you might want to go ahead and get the blue one.”

Personally, I don’t mind the idea of the government promoting the blue pill over the red pill, as long as it really is “just as effective.” I certainly trust the government to make these distinctions more than I trust the insurance companies or pharma reps. But I want to know that the only target is genuine waste, and the only possible casualty is profits.

There’s nothing to give me pause in the health care law’s comparative effectiveness provision, which includes $500 million a year for comparative effectiveness research. The work is to be overseen by the nonprofit Patient-Centered Outcomes Research Institute, whose 21-member board of governors will include doctors, patient advocates, and only three representatives of drug and medical-device companies.

Still, there’s a difference between comparative effectiveness and comparative cost effectiveness—and from the latter, it’s a short skip to outright cost-benefit analysis. In other words, the argument sometimes slides almost imperceptibly from comparing how well the blue pill and the red pill work to examining whether some people should be denied the red pill, even if it demonstrably works better.

The calculations driving such cost-benefit analyses are often based on something called QALYs, or quality-adjusted life years. If a certain cancer drug would extend life by two years, say, but with such onerous side effects that those years were judged to be only half as worth living as those of a healthy person, the gain is 1 QALY.

In Britain, the National Health Service has come close to setting a maximum price beyond which extra QALYs are not deemed worthwhile. In assessing drugs and treatments, the NHS’s National Institute for Health and Clinical Excellence usually approves those that cost less than 20,000 pounds per QALY (about $28,500), and most frequently rejects those costing more than 30,000 pounds (about $43,000).
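To make the arithmetic concrete, here is a minimal sketch in Python of how a QALY figure and a cost-per-QALY comparison might be worked out. It is an illustration only: the function names are invented, the 50,000-pound drug price is hypothetical, and the "case by case" label for the band between the two thresholds is my own assumption, since the thresholds are described only as what is usually approved and most frequently rejected.

```python
# Sketch of the QALY arithmetic described above. Not the NHS's actual method.

def qalys(years_gained, quality_weight):
    """Quality-adjusted life years: extra years times a 0-to-1 quality weight."""
    return years_gained * quality_weight

def cost_per_qaly(total_cost, years_gained, quality_weight):
    """Total treatment cost divided by the QALYs it buys."""
    return total_cost / qalys(years_gained, quality_weight)

def threshold_verdict(pounds_per_qaly, approve_below=20_000, reject_above=30_000):
    """Compare a cost per QALY with the two thresholds quoted in the text."""
    if pounds_per_qaly < approve_below:
        return "usually approved"
    if pounds_per_qaly > reject_above:
        return "most frequently rejected"
    # Assumption: the text does not say what happens between the thresholds.
    return "case by case"

# The cancer drug example: two extra years, each judged half as good as a healthy year.
gain = qalys(2, 0.5)
print(gain)  # 1.0

# Hypothetical price of 50,000 pounds for the full course of treatment.
price_per_qaly = cost_per_qaly(50_000, 2, 0.5)
print(price_per_qaly, threshold_verdict(price_per_qaly))
```

Notice that the quality weight does most of the work. Halve it and the cost per QALY doubles, which is why it matters so much who gets to decide how much a year of someone else's life is worth.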

It’s not hard to find examples of comparative effectiveness research—complete with QALYs—that hit quite close to home for almost anyone. Last year I was diagnosed with atrial fibrillation, a disturbance in the heart rhythm that sometimes leads to blood clots, which can travel to the brain and cause a stroke. My doctor put me on warfarin (brand name Coumadin), a blood-thinning drug that reduces the chances of forming blood clots but can also cause internal bleeding. It’s risky enough that when I go to the dentist or cut myself shaving, I have to watch to make sure it doesn’t turn into a torrent of blood. The levels of warfarin in my bloodstream have to be frequently checked, so I have to be ever mindful of the whereabouts of a hospital with a blood lab. It is a pain in the neck, and it makes me feel vulnerable. I sometimes wonder if it’s worth it.

It turns out that several comparative effectiveness studies have looked at the efficacy of warfarin for patients with my heart condition. One of them simply weighed the drug’s potential benefits against its dangerous side effects, without consideration of cost. It concluded that for a patient with my risk factors, warfarin reduced the chance of stroke a lot more than it increased the chance that I’d be seriously harmed by bleeding. Another study concluded that for a patient like me, the cost per QALY of taking warfarin is $8,000—cheap, by most standards.

Prescription drug prices have more than doubled since the study was done in 1995. But warfarin is a relatively cheap generic drug, and even if my cost per QALY was $15,000 or $20,000, I’d still pass muster with the NHS. But if I were younger and had fewer risk factors, I’d be less prone to stroke to begin with, so the reduction in risk would not be as large, and the cost per QALY would be correspondingly higher—about $370,000. Would I still want to take the drug if I were, say, under 60 and free of risk factors? Considering the side effects, probably not. But would I want someone else to make that decision for me?

Critics of the British system say, among other things, that the NHS’s cost-per-QALY limit is far too low. But raising it wouldn’t resolve the deeper ethical question: Should anyone but the patient get to decide when life is not worth living? The Los Angeles Times’ Michael Hiltzik, one of the few reporters to critically examine this issue, has noted that “healthy people tend to overestimate the effect of some medical conditions on their sufferers’ quality of life. The hale and hearty, for example, will generally rate life in a wheelchair lower than will the wheelchair-bound, who often find fulfillment in ways ‘healthier’ persons couldn’t imagine.”

Simone de Beauvoir wrote that fear of aging and death drives young people to view their elders as a separate species, rather than as their future selves: “Until the moment it is upon us old age is something that only affects other people.” And the more I think about the subject, the more I am sure of one thing: It’s not a good idea to have a 30-year-old place a value on my life.

Whose Death Is It Anyway?

Probably the most prominent advocate of age-based rationing is Daniel Callahan, cofounder of a bioethics think tank called the Hastings Center. Callahan’s 1987 book, Setting Limits: Medical Goals in an Aging Society, depicted old people as “a new social threat,” a demographic, economic, and medical “avalanche” waiting to happen. In a 2008 article, Callahan said that in evaluating Medicare’s expenditures, we should consider that “there is a duty to help young people to become old people, but not to help the old become still older indefinitely…One may well ask what counts as ‘old’ and what is a decently long lifespan? As I have listened to people speak of a ‘full life,’ often heard at funerals, I would say that by 75-80 most people have lived a full life, and most of us do not feel it a tragedy that someone in that age group has died (as we do with the death of a child).” He has proposed using “age as a specific criterion for the allocation and limitation of care,” and argues that after a certain point, people could justifiably be denied Medicare coverage for life-extending treatments.

You can see why talk like this might make some old folks start boarding up their doors. (It apparently does not, however, concern Callahan, who last year at age 79 told the New York Times that he had just had a life-saving seven-hour heart procedure.) It certainly made me wonder how I would measure up.

So far, I haven’t cost the system all that much. I take several different meds every day, which are mostly generics. I go to the doctor pretty often, but I haven’t been in the hospital overnight for at least 20 years, and my one walk-in operation took place before I was on Medicare. And I am still working, so I’m paying in as well as taking out.

But things could change, perhaps precipitously. Since I have problems with both eyesight and balance, I could easily fall and break a bone, maybe a hip. This could mean a hip replacement, months of therapy, or even long-term immobility. My glaucoma could take a turn for the worse, and I would face a future of near blindness, with all the associated costs. Or I could have that stroke, in spite of my drug regimen.

I decided to take the issue up with the Australian philosopher Peter Singer, who made some waves on this issue with a New York Times Magazine piece published last year, titled “Why We Must Ration Health Care.” Singer believes that health care is a scarce resource that will inevitably be limited. Better to do it through a public system like the British NHS, he told me, than covertly and inequitably on the private US model. “What you are trying to do is to get the most value for the money from the resources you have,” he told me.

In the world he imagines, I asked Singer over coffee in a Manhattan café, what should happen if I broke a hip? He paused to think, and I hoped he wouldn’t worry about hurting my feelings. “If there is a good chance of restoring mobility,” he said after a moment, “and you have at least five years of mobility, that’s significant benefit.” He added, “Hip operations are not expensive.” A new hip or knee runs between $30,000 and $40,000, most of it covered by Medicare. So for five years of mobility, that comes out to about $7,000 a year, which is less than the cost of a home-care aide and far less than a nursing home.

But then Singer turned to a more sobering thought: If the hip operation did not lead to recovery of mobility, then it might not be such a bargain. In a much-cited piece of personal revelation, Obama in 2009 talked about his grandmother’s decision to have a hip replacement after she had been diagnosed with terminal cancer. She died just a few weeks later. “I don’t know how much that hip replacement cost,” Obama told the Times Magazine. “I would have paid out of pocket for that hip replacement just because she’s my grandmother.” But the president said that in considering whether “to give my grandmother, or everybody else’s aging grandparents or parents, a hip replacement when they’re terminally ill…you just get into some very difficult moral issues.”

Singer and I talked about what choices we ourselves might make at the end of our lives. Singer, who is 63, said that he and his wife “know neither of us wants to go on living under certain conditions. Particularly if we get demented. I would draw the line if I could not recognize my wife or my children. My wife has a higher standard—when she couldn’t read a novel. Yes, I wouldn’t want to live beyond a certain point. It’s not me anymore.” I’m 10 years older than Singer, and my own advance directives reflect similar choices. So it seems like neither one of us is likely to strain the public purse with our demands for expensive and futile life-prolonging care.

You can say this is all a Debbie Downer, but people my age know perfectly well that these questions are not at all theoretical. We worry about the time when we will no longer be able to contribute anything useful to society and will be completely dependent on others. And we worry about the day when life will no longer seem worth living, and whether we will have the courage—and the ability—to choose a dignified death. We worry about these things all by ourselves—we don’t need anyone else to do it for us. And we certainly don’t need anyone tallying up QALYs while our overpriced, underperforming private health care system adds a few more points to its profit margin.

Let It Bleed

What happened during the recent health care wars is what military strategists might call a “bait-and-bleed” operation: Two rival parties are drawn into a protracted conflict that depletes both their forces, while a third stands on the sidelines, its strength undiminished. In this case, Republicans and Democrats alike have shed plenty of blood, while the clever combatant on the sidelines is, of course, the health care industry.

In the process, health care reform set some unsettling precedents that could fuel the phony intergenerational conflict over health care resources. The final reform bill will help provide coverage to some of the estimated 46 million Americans under 65 who live without it. It finances these efforts in part by cutting Medicare costs—some $500 billion over 10 years. Contrary to Republican hysteria, the cuts so far come from all the right places—primarily from ending the rip-offs by insurers who sell government-financed “Medicare Advantage” plans. The reform law even manages to make some meaningful improvements to the flawed Medicare prescription drug program and preventive care. The legislation also explicitly bans age-based health care rationing.

Still, there are plenty of signs that the issue is far from being put to rest. Congress and the White House wrote into the law something called the Independent Payment Advisory Board, a presidentially appointed panel that is tasked with keeping Medicare’s growth rate below a certain ceiling. Office of Management and Budget director Peter Orszag, the economics wunderkind who has made Medicare’s finances something of a personal project, has called it potentially the most important aspect of the legislation: Medicare and Medicaid, he has said, “are at the heart of our long-term fiscal imbalance, which is the motivation for moving to a different structure in those programs.” And then, of course, there’s Obama’s deficit commission: While the president says he is keeping an open mind when it comes to solving the deficit “crisis,” no one is trying very hard to pretend that the commission has any purpose other than cutting Social Security, Medicare, and probably Medicaid as well.

Already, the commission is working closely with the Peter G. Peterson Foundation, headed by the billionaire businessman and former Nixon administration official who has emerged as one of the nation’s leading “granny bashers”—deficit hawks who accuse old people of bankrupting the country.

In the end, of course, many conservatives are motivated less by deficits and more by free-market ideology: Many of them want to replace Medicare as it now exists with a system of vouchers, and place the emphasis on individual savings and tax breaks. Barring that, Republicans have proposed a long string of cuts to Medicaid and Medicare, sometimes defying logic by, for example, advocating reductions in in-home care, which can keep people out of far more expensive nursing homes.

The common means of justifying these cuts is to attack Medicare “waste.” But remember that not only are Medicare’s administrative costs less than one-sixth of those of private insurers, but Medicare also pays doctors and hospitals less than private insurers do (20 and 30 percent less, respectively); overall, Medicare pays out less in annual per capita benefits than the average large employer health plan, even though it serves an older, sicker population.

That basic fact is fully understood by the health care industry. Back in January 2009, as the nation suited up for the health care wars, the Lewin Group, a subsidiary of the health insurance giant UnitedHealth, produced an analysis of various reform proposals being floated and found that the only one to immediately reduce overall health care costs (by $58 billion) was one that would have dramatically expanded Medicare.

Facts like these, however, have not slowed down the granny bashers. In a February op-ed called “The Geezers’ Crusade,” commentator David Brooks urged old people to willingly submit to entitlement cuts in service to future generations. Via Social Security and Medicare, he argued, old folks are stealing from their own grandkids.

I’m as public spirited as the next person, and I have a Gen X son. I’d be willing to give up some expensive, life-prolonging medical treatment for him, and maybe even for the good of humanity. But I’m certainly not going to do it so some WellPoint executive can take another vacation, so Pfizer can book $3 billion in annual profits instead of $2 billion, or so private hospitals can make another campaign contribution to some gutless politician.

Here, then, is my advice to anyone who suggests that we geezers should do the right thing and pull the plug on ourselves: Start treating health care as a human right instead of a profit-making opportunity, and see how much money you save. Then, by all means, get back to me.